Defining Gesture Interaction

Interacting with in-car controls requires drivers to divert visual attention from the road to the dashboard, for example when adjusting the climate controls, changing the music, or entering a destination in the navigation system. Ideally, these moments are minimized to reduce driver distraction. Research into multi-modal interaction shows that this is possible by distributing information to non-visual modalities.

Gestures require no visual attention or hand-eye coordination. If gestures replace some of the in-car controls, we can assume that they may have a positive effect on driver distraction. Surprisingly, there is not much public research on how gesture interaction affects driving safety. The limited research that does exist is positive, but it is not enough to say with confidence that gesture interaction reduces driver distraction.

Another assumption is that gesture interaction may be less demanding than other non-visual alternatives, like voice interaction. Despite cultural differences, hand gestures are innate to human communication. Most of us use hand gestures in our daily communication without thinking about it. If this can be leveraged for in-car use, it could impose a much lower cognitive load than speech-based interaction.

Types of Gestures

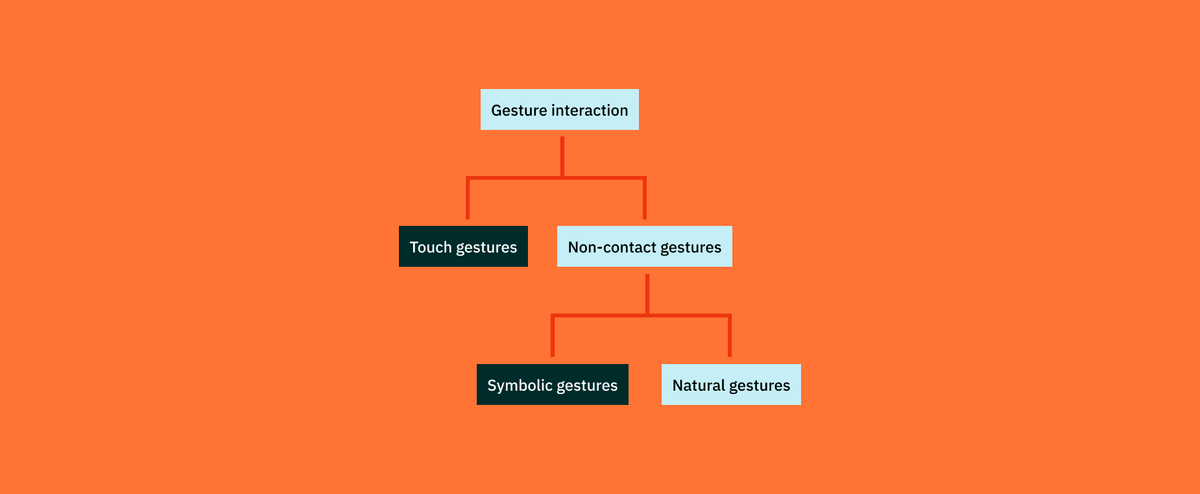

Let's start by defining what we mean by gesture interaction. It can be divided into two groups: touch gestures, which I previously experimented with, and air gestures. This post concerns the latter.

And even the subset of air gestures is too broad. For example, sign language could fall under air gestures. In theory, we could teach drivers sign language to interact with the car. But as you may be thinking right now, teaching drivers sign language is not very user-friendly. Instead, the goal is to get away from pre-defined interaction techniques. If the interaction with the car is like natural conversation, the cognitive load would be greatly reduced. Therefore, the specific subset of gestures that will be discussed is natural air gestures.

It can be hard to picture what natural air gestures are. Carl A. Pickering et al. have classified air gestures into five categories for in-car use:

Function-Associated Gestures

A function-associated gesture pairs a function with a natural action of the hand or arm. For example, moving your hand from front to back close to the sunroof could signify opening the sunroof of the car.

Pre-emptive Gestures

These gestures occur when the system detects the driver's hand approaching a control and infers the intended action. For example, moving your hand close to the interior light can turn the light on without the driver having to press a button.

Context-Sensitive Gestures

These are natural gestures that are used to respond to a specific event or prompt. For example, a thumbs-up or thumbs-down to reply to a message or accept a call.

Natural Dialogue Gestures

As the name suggests, these occur when the driver uses common communication gestures to interact with the vehicle. For example, when the driver makes a fanning gesture, the system can lower the temperature.

Global Shortcut Gestures

These gestures are custom movements that are mapped to a specific function and that can be used at any time. For example, BMW offers a gesture that drivers can configure as a shortcut.

Mapping

How the gestures are mapped to the in-car controls is also crucial to the user experience. Some important questions arise when thinking of gesture mapping: should gestures supplement or replace existing interactions? And how many gesture commands can be used?

It becomes more difficult to remember the gestural command set as the number of gestures increases. If we were to replace all interior controls with gestures today, it is estimated that it would require a set of 300 to 700 different gestures. Simply replacing the current structure is therefore not realistic. However, current research recommends using a small set of natural hand gestures to supplement interior controls.

To figure out exactly which set of controls to map gestures to, we can turn again to Carl A. Pickering et al., who have created a classification:

By Control Type

Gestures can simply be mapped to a button or switch. The gesture would then control the underlying functionality of that particular button. For example, a thumbs-up could be mapped to the 'accept call' button.

By Theme

Instead of a specific button, gestures could be mapped to a set of controls that share a theme. Examples are the lighting in the car, or opening and closing various parts of the interior such as the windows.

By Function

Slightly different from the first option, a gesture can also be mapped to the underlying function instead of an existing button or switch. For example, you could map a gesture to a function that normally requires multiple interactions, like playing a specific playlist on Spotify.
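To make the three mapping styles more concrete, here is a minimal sketch of how a gesture vocabulary could be represented in code. It is only an illustration: the names `MappingKind`, `GestureMapping`, `MAPPINGS`, and `resolve`, as well as the gesture and target labels, are hypothetical and do not come from Pickering et al. or from any real car system.

```python
from dataclasses import dataclass
from enum import Enum


class MappingKind(Enum):
    CONTROL = "control"    # mapped to an existing button or switch
    THEME = "theme"        # mapped to a group of related controls
    FUNCTION = "function"  # mapped directly to an underlying function


@dataclass(frozen=True)
class GestureMapping:
    gesture: str       # label produced by a (hypothetical) gesture recognizer
    kind: MappingKind  # which of the three mapping styles is used
    target: str        # the button, theme, or function being addressed


# Hypothetical vocabulary, loosely based on the examples in the text.
MAPPINGS = [
    GestureMapping("thumbs_up", MappingKind.CONTROL, "accept_call_button"),
    GestureMapping("point_at_roof", MappingKind.THEME, "interior_lighting"),
    GestureMapping("two_finger_swipe", MappingKind.FUNCTION, "play_playlist"),
]


def resolve(gesture, mappings=MAPPINGS):
    """Return the mapping for a recognized gesture, or None if unmapped."""
    for mapping in mappings:
        if mapping.gesture == gesture:
            return mapping
    return None
```

Keeping the vocabulary in a small, explicit table like this reflects the recommendation to use only a small set of gestures: every new entry is one more command the driver has to remember.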

Now that we have defined the types of natural air gestures and the possible ways to map them, let's look at the two main challenges to watch out for when creating a gestural interface.

The Two Main Challenges of Air Gestures

Discoverability

As with most novel interaction methods, discoverability is a big challenge. Most new cars use touch interfaces. There are few people buying a new car who are not in daily contact with touch interfaces. Therefore, when presented with a new touch interface, as long as it uses the same principles as other popular interfaces, drivers can rely on their mental models of touch interfaces to learn the new system.

Aside from niche applications like gaming and virtual reality, gesture interaction is not common. There are no widely adopted standards or heuristics when it comes to air gestures. Any person who enters a car equipped with gesture interaction will have to learn to interact with it from scratch.

On top of that, an air gesture interface is hidden. Physical and touch-based interfaces use signifiers to communicate which elements are interactive and what type of interaction they expect.

Air gestures lack these signifiers. A driver needs to know what to do before performing a gesture.

Other applications that use gesture control, such as Xbox Kinect, have visual indicators explaining to new users how to interact.

Visual cues can be a valid way to teach drivers how to interact with the air gesture system. Introducing visual instructions could somewhat defeat the purpose of avoiding driver distraction. However, they could be implemented as an initial tutorial that can be removed or ignored once the driver has mastered the system.

Feedback

The second difficult problem to solve is feedback. In a perfect use case, a gesture detection system has 100% accuracy. In practice, this is not possible. When the driver performs an air gesture, the system needs to confirm that it has detected the gesture and classified it correctly, and communicate the result back to the driver. Current research describes three viable ways to do this.

Visual Feedback

Generally in cars, most feedback is given via displays or sound. For air gestures, the visual channel is sub-optimal as it is the primary channel used for the driving task. Showing gesture feedback on a display would take away the advantage of gestures placing no load on the visual channel. However, there are other ways of providing visual feedback that may be less distracting. There is promising research about using peripheral vision.

Several experiments around peripheral feedback in a driving context show positive results, both in reducing driver distraction and in user acceptance, including experiments tailored to gesture interaction. An example of this type of feedback that is already in use today is the blind-spot detection system embedded in side mirrors.

Auditory Feedback

Auditory feedback is used a lot in cars today. Every modern car comes with a wide range of beeps and sounds to warn drivers. It could be an option for gesture interaction as well. The difficulty is that there seems to be no consensus on whether it improves eyes-off-the-road time compared to visual feedback. Where visual feedback does perform better is in executing the gestures. Experiments have shown that drivers make more mistakes and correct them less often when using non-visual feedback.

Haptic Feedback

Another promising alternative is haptic feedback. Even though the driver does not touch any surface when performing air gestures, there is a way to provide haptic feedback. Ultrasound haptics uses acoustic radiation pressure to create focused points of pressure that can be felt on the skin. This makes it possible to make drivers 'feel' as if they are pressing physical buttons. It is the most technologically advanced option, and a concept by BMW shows great promise. BMW presented the i Inside Future concept in 2017, which showed off haptic feedback for gestures.

For now, it only seems possible to emulate physical buttons, which strongly limits the scope of the gestures that can be used. In the future, however, this technology could be extended to provide more elaborate feedback that supports a wider range of gestures.

Should Car Companies Introduce Gesture Interaction?

Today, air gestures are a niche technology. Most drivers will encounter them for the first time when they get into a car that has them. Therefore, they come with core challenges such as discoverability and feedback.

A multi-modal interface has a positive effect on driving safety. But even though research seems to point in the right direction, there is no consensus on whether gesture interaction is better than other interaction modes such as voice interaction.

Creating an air gesture-based interface comes with a lot of possibilities for potential mappings and a gesture vocabulary. But the real limiting factor is likely not the technology, but user acceptance.

The rate of user acceptance is driven by how widely adopted gesture interaction is in other application areas. Until we interact daily in our home and work lives via air gestures, it is unlikely that they will become an accepted interaction mode in cars. This may be the reason why BMW seems to have dropped its gesture control system from its latest high-end models.

On the other hand, the world of VR/AR is a rapidly growing area that is invested in gesture interaction. In addition, car companies can play a role in the adoption of gesture control by jumping on new trends and slowly introducing it in their cars, for example by starting with pre-emptive gestures and building out the gesture vocabulary over time. Until then, gestures remain more of a gimmick than a valid interaction mode.
